Latest Articles

Discover insights, tutorials, and news about machine learning, technology, and more

Multi-armed bandit problem: Your First Reinforcement Learning Agent in 100 Lines of C

Explore cutting-edge AI, Machine Learning, and Computer Science insights, tutorials, and innovations. From deep learning to systems programming, we’re your gateway to the future of technology.

Neural networks, transformers, and computer vision

System design, architecture, and full-stack development

Fairness, transparency, and responsible innovation

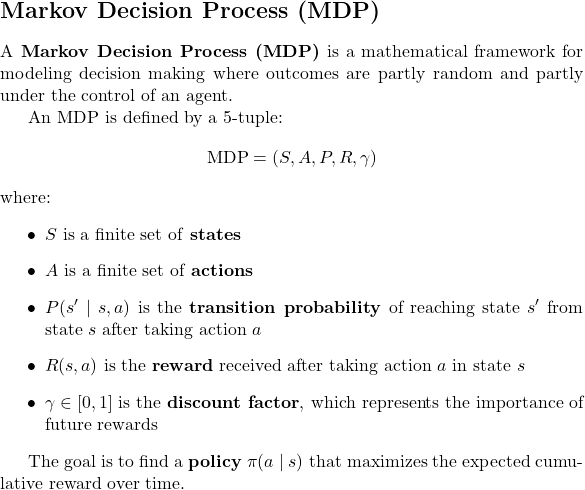

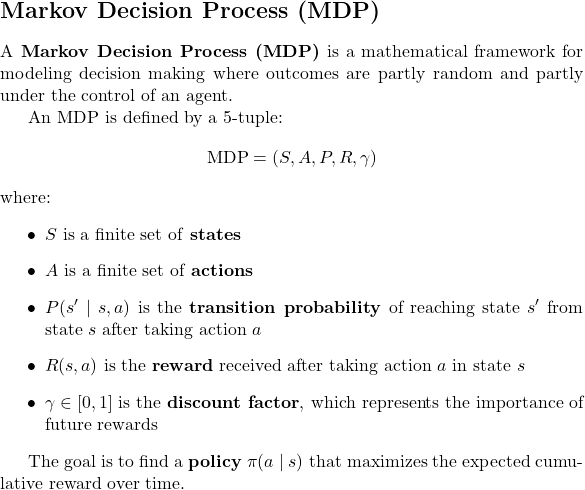

Intelligent systems and autonomous agents

Find the perfect resources for your tech journey in our specialized topic collections

Dive into the world of algorithms, models, and AI innovations.

Check out our curated collection of top and trending articles.

Explore interactive neural networks, KNN visualizers, and AI learning tools

Discover the latest in front-end and back-end web development techniques.

Get the latest AI, Machine Learning, and Computer Science insights, tutorials, and industry news delivered straight to your inbox. Join thousands of tech enthusiasts staying ahead of the curve.

Get our latest technical deep-dives and AI/ML research insights every week.

Access subscriber-only AI/ML coding tutorials, model-building walkthroughs, and more.

Stay informed about the latest trends in artificial intelligence and computer science.

No spam. Unsubscribe at any time. Your email is safe with us.