Have you ever tried to make multiple asynchronous API calls in your JavaScript code, only to overwhelm your servers or exceed rate limits? Or perhaps you needed to guarantee that a series of async tasks completed in a specific order?

Async queues are a simple but powerful tool that allows you to execute asynchronous JavaScript code in a controlled manner. They give you finer-grained control over the concurrency, ordering, and error handling of tasks.

With just a few lines of code, you can create queues that:

- Set a limit on the number of asynchronous tasks running concurrently, preventing overload

- Preserve the order of async operations even when using callbacks, promises, or async/await

- Retry failed operations without gumming up the rest of your code

- Update the UI smoothly by prioritizing important user-facing tasks

You’ll learn step-by-step how to implement a basic yet flexible async queue in JavaScript. We’ll cover:

- The basics of enqueueing and processing queue items

- Controlling levels of concurrency

- Handling errors gracefully

- Some cool advanced use cases

So if you want to level up your async coding skills and smoothly coordinate complex flows of asynchronous code, read on! By the end, you’ll have a new async tool under your belt that makes taming asynchronicity a breeze.

What is an Async Queue?

An async queue is a queue data structure that processes tasks asynchronously. It lets you control concurrency and execute tasks in order, which makes it useful for handling async work such as network requests without overloading a system.

Why Async Queues Are Helpful

Async queues play a crucial role in optimizing workflows and resource utilization. Here are key reasons why leveraging async queues is beneficial:

- Avoid making too many async requests at once: Async queues help prevent the potential bottleneck that can occur when too many asynchronous requests are initiated simultaneously. By funneling tasks through a queue, you can control the rate at which requests are processed, avoiding overwhelming your system and ensuring a more stable and predictable operation.

- Process a known number of tasks concurrently for better resource management: Async queues allow you to manage resources efficiently by specifying the number of tasks processed concurrently. This capability is particularly useful when dealing with limited resources or when you want to strike a balance between maximizing throughput and preventing resource exhaustion. It enables fine-tuning the workload to match the available resources, optimizing overall system performance.

- Execute tasks sequentially if order is important: In scenarios where task order is crucial, async queues provide a structured approach to executing tasks sequentially. Tasks enter the queue in the order they are received, ensuring that they are processed in a deterministic and organized manner. This sequential execution is vital for scenarios where maintaining task order is essential for the integrity of the overall process, such as in financial transactions or data processing pipelines.

Async queues offer a flexible and efficient mechanism for task management, allowing you to balance concurrency, prevent overload, and maintain the desired order of execution. Whether you’re handling a high volume of requests or ensuring the integrity of sequential processes, leveraging async queues contributes to a more robust and scalable system architecture.
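To make the concurrency point concrete, here is a minimal sketch of a helper that runs promise-returning functions with at most a fixed number in flight. The names runWithLimit and fakeRequest are illustrative assumptions, not part of any library, and the "request" is simulated with a short timer.

```javascript
// Hypothetical helper: run promise-returning functions with at most `limit` in flight.
async function runWithLimit(taskFns, limit) {
  const results = new Array(taskFns.length);
  let next = 0; // index of the next task to start

  // Each worker repeatedly claims the next unstarted task until none remain.
  async function worker() {
    while (next < taskFns.length) {
      const index = next++;
      results[index] = await taskFns[index]();
    }
  }

  const workerCount = Math.min(limit, taskFns.length);
  await Promise.all(Array.from({ length: workerCount }, worker));
  return results; // results are stored in input order
}

// Stand-in for a network request (assumption: resolves after a short delay).
let inFlight = 0;
let peakInFlight = 0;
function fakeRequest(id) {
  return async () => {
    inFlight++;
    peakInFlight = Math.max(peakInFlight, inFlight);
    await new Promise((resolve) => setTimeout(resolve, 10));
    inFlight--;
    return `response ${id}`;
  };
}

const limitedRun = runWithLimit([1, 2, 3, 4, 5].map(fakeRequest), 2);
limitedRun.then((responses) => {
  console.log(responses.length, 'responses, peak concurrency', peakInFlight);
});
```

Passing a limit of 1 turns the same helper into a strictly sequential runner, which is the ordering guarantee described above.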

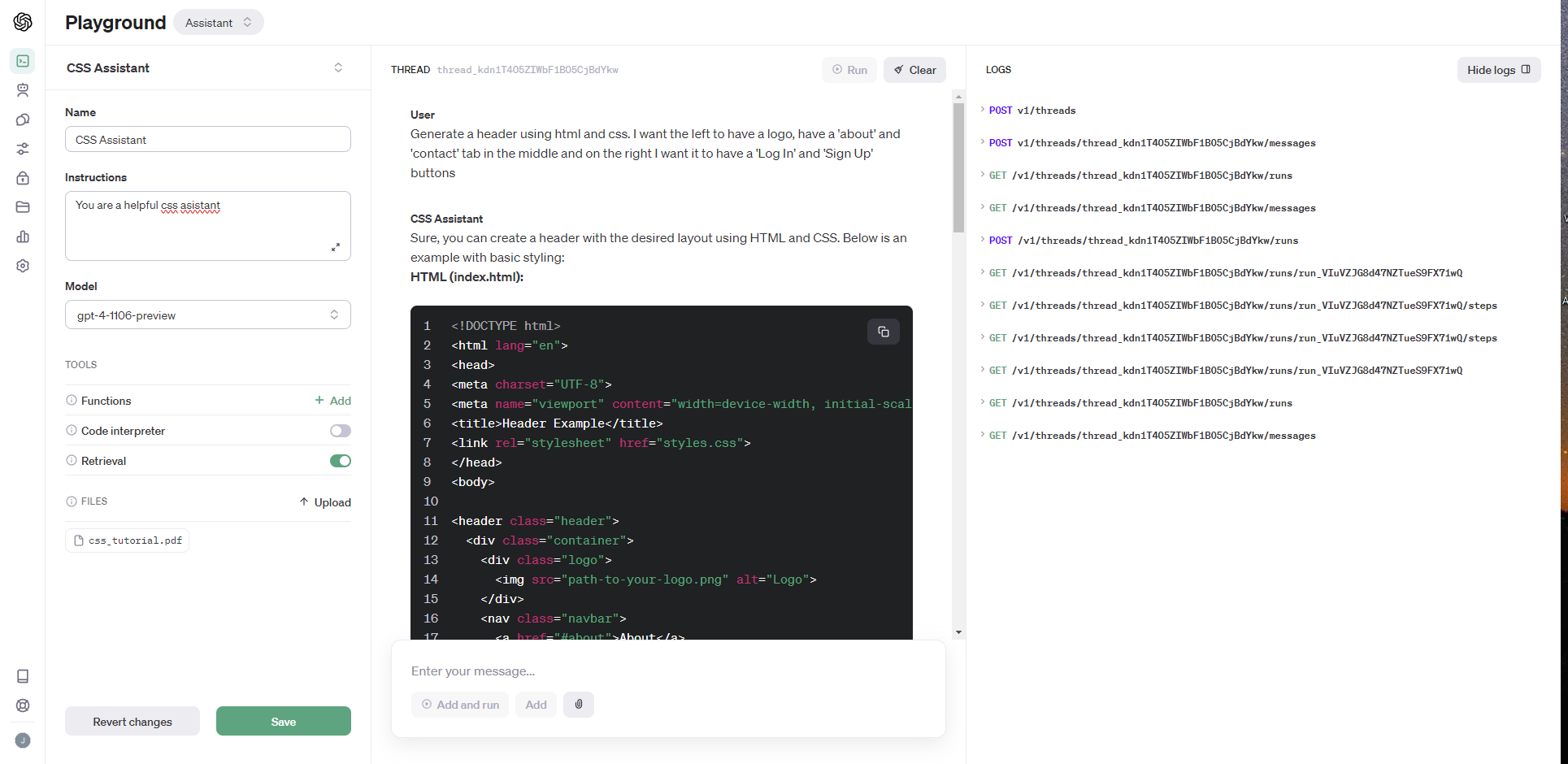

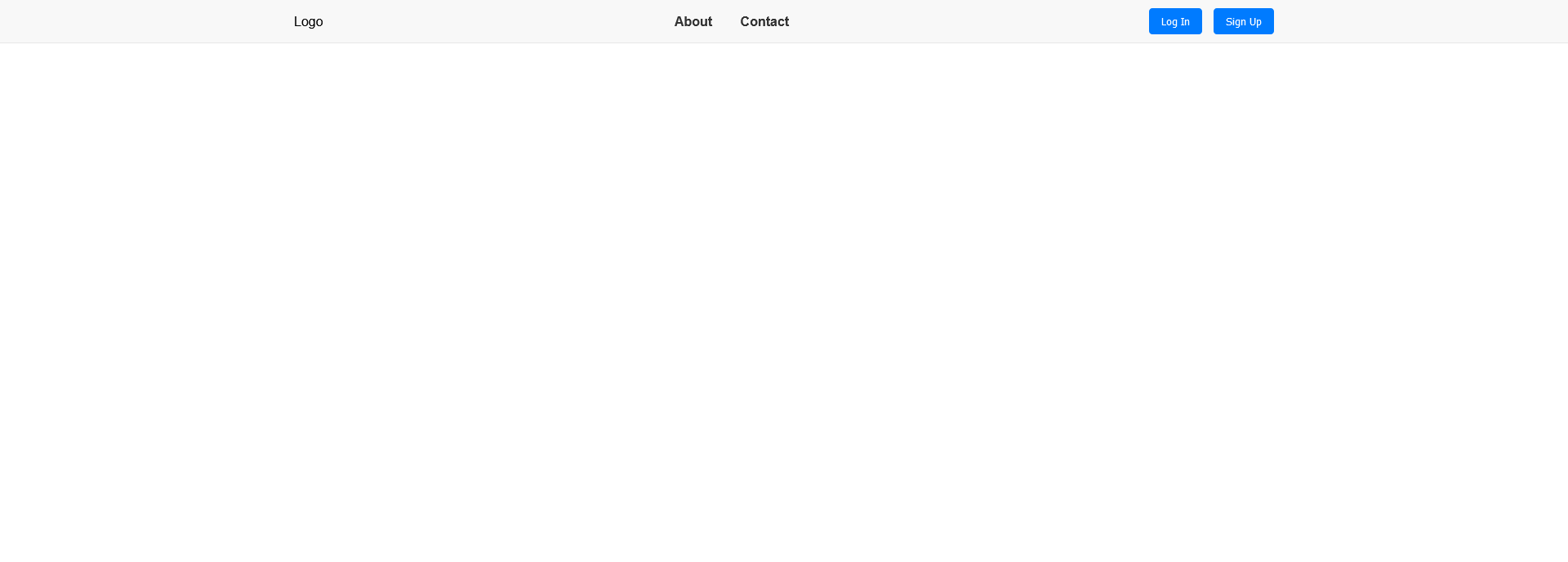

Creating a Basic Async Queue

    class AsyncQueue {
      constructor(concurrencyLimit) {
        this.queue = [];
        this.concurrentTasks = 0;
        this.concurrencyLimit = concurrencyLimit;
      }

      // Method to add tasks to the queue
      enqueue(task) {
        this.queue.push(task);
        this.processQueue();
      }

      // Method to get the next task from the queue
      dequeue() {
        return this.queue.shift();
      }

      // Internal method to process tasks from the queue
      processQueue() {
        while (this.concurrentTasks < this.concurrencyLimit && this.queue.length > 0) {
          const task = this.dequeue();
          this.executeTask(task);
        }
      }

      // Internal method to execute a task
      executeTask(task) {
        this.concurrentTasks++;

        // Simulate an asynchronous task (you would replace this with your actual task logic)
        setTimeout(() => {
          console.log(`Task "${task}" completed`);
          this.concurrentTasks--;

          // Check if there are more tasks in the queue
          if (this.queue.length > 0) {
            this.processQueue();
          }
        }, Math.random() * 1000); // Simulating variable task execution time
      }
    }

    // Example usage:
    const asyncQueue = new AsyncQueue(2); // Set concurrency limit to 2
    asyncQueue.enqueue('Task 1');
    asyncQueue.enqueue('Task 2');
    asyncQueue.enqueue('Task 3');
    asyncQueue.enqueue('Task 4');

What we did:

- Initialize a queue array to store tasks

- Write an enqueue method to add tasks

- Write a dequeue method to get the next task

- Maintain a concurrency limit variable to control how many tasks execute at once
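The class above simulates work with a timer and takes plain strings as tasks. As a rough sketch of how the same structure might look when tasks are real promise-returning functions, the variant below has enqueue return a promise for each task's result. The names PromiseQueue and delay are assumptions made for illustration.

```javascript
// Sketch: a queue whose tasks are functions that return promises.
class PromiseQueue {
  constructor(concurrencyLimit) {
    this.queue = [];
    this.concurrentTasks = 0;
    this.concurrencyLimit = concurrencyLimit;
  }

  // Returns a promise that settles with the task's own result or error.
  enqueue(taskFn) {
    return new Promise((resolve, reject) => {
      this.queue.push({ taskFn, resolve, reject });
      this.processQueue();
    });
  }

  processQueue() {
    while (this.concurrentTasks < this.concurrencyLimit && this.queue.length > 0) {
      const { taskFn, resolve, reject } = this.queue.shift();
      this.concurrentTasks++;
      // Starting from Promise.resolve() also captures synchronous throws in taskFn.
      Promise.resolve()
        .then(taskFn)
        .then(resolve, reject)
        .finally(() => {
          this.concurrentTasks--;
          this.processQueue();
        });
    }
  }
}

// Usage: with a limit of 1, tasks run strictly one after another,
// so the slow task finishes before the fast one even starts.
const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const sequentialQueue = new PromiseQueue(1);
const completionOrder = [];

const allDone = Promise.all([
  sequentialQueue.enqueue(async () => {
    await delay(20);
    completionOrder.push('slow');
    return 'slow';
  }),
  sequentialQueue.enqueue(async () => {
    await delay(1);
    completionOrder.push('fast');
    return 'fast';
  }),
]);
allDone.then(() => console.log(completionOrder));
```

Returning a promise from enqueue lets callers await an individual task while the queue still decides when that task is allowed to start.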

Handling Errors

Effective error handling is a critical aspect of building robust systems. When working with an asynchronous queue, it’s essential to implement strategies that gracefully handle errors and maintain the integrity of the queue. Here are key practices for handling errors in an async queue:

Wrap tasks in the queue with error handling

To fortify your async queue against potential errors, encapsulate the execution logic of each task within a try-catch block. This ensures that any errors occurring during task execution are caught and handled appropriately, preventing them from disrupting the overall operation of the queue. For promise-based tasks, await the promise inside the try block; otherwise a rejection bypasses the catch.

    // Modify the executeTask method in the AsyncQueue class
    executeTask(task) {
      this.concurrentTasks++;

      try {
        // Task execution logic goes here

        // Simulated error for demonstration purposes
        if (Math.random() < 0.3) {
          throw new Error('Simulated error during task execution');
        }

        // Successful execution
        console.log(`Task "${task}" completed`);
      } catch (error) {
        console.error(`Error executing task "${task}":`, error.message);
        // Optionally, emit events or call callbacks to notify of errors
      } finally {
        this.concurrentTasks--;

        // Check if there are more tasks in the queue
        if (this.queue.length > 0) {
          this.processQueue();
        }
      }
    }
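The snippet above runs synchronously, so its try-catch never has to deal with a rejected promise. As a hedged sketch of the same idea for real async work, the class below awaits each task inside the try block so that rejections reach the catch, and the finally block frees the slot either way. SafeQueue and its completed and failed arrays are hypothetical names used only for this example.

```javascript
// Sketch: error isolation for promise-returning tasks.
class SafeQueue {
  constructor(concurrencyLimit) {
    this.queue = [];
    this.concurrentTasks = 0;
    this.concurrencyLimit = concurrencyLimit;
    this.completed = []; // names of tasks that finished successfully
    this.failed = []; // records of tasks that threw or rejected
  }

  enqueue(name, taskFn) {
    this.queue.push({ name, taskFn });
    this.processQueue();
  }

  processQueue() {
    while (this.concurrentTasks < this.concurrencyLimit && this.queue.length > 0) {
      this.executeTask(this.queue.shift());
    }
  }

  async executeTask({ name, taskFn }) {
    this.concurrentTasks++;
    try {
      // Without `await`, a rejected promise would bypass the catch block.
      await taskFn();
      this.completed.push(name);
    } catch (error) {
      this.failed.push({ name, message: error.message });
    } finally {
      // Runs on success and on failure, so a bad task never blocks the queue.
      this.concurrentTasks--;
      this.processQueue();
    }
  }
}

// Usage: the second task fails, and the third still runs.
const safeQueue = new SafeQueue(1);
safeQueue.enqueue('first', async () => 'ok');
safeQueue.enqueue('second', async () => {
  throw new Error('boom');
});
safeQueue.enqueue('third', async () => 'ok');

setTimeout(() => {
  console.log('completed:', safeQueue.completed, 'failed:', safeQueue.failed);
}, 50);
```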

On error, keep the failed task out of the queue

A task is removed from the queue by dequeue() before it starts running, so a failed task is not retried unless you explicitly enqueue it again. No extra removal is needed in the error handler. In particular, avoid calling this.queue.shift() in the catch block: at that point the first item in the queue is the next pending task, and shifting would silently discard it.

    // The failed task is already out of the queue when the error is caught
    executeTask(task) {
      this.concurrentTasks++;

      try {
        // Task execution logic goes here

        // Simulated error for demonstration purposes
        if (Math.random() < 0.3) {
          throw new Error('Simulated error during task execution');
        }

        // Successful execution
        console.log(`Task "${task}" completed`);
      } catch (error) {
        console.error(`Error executing task "${task}":`, error.message);

        // Nothing to remove here: the task was dequeued before it ran.
        // Calling this.queue.shift() would drop the next pending task instead.

        // Optionally, emit events or call callbacks to notify of errors
      } finally {
        this.concurrentTasks--;

        // Check if there are more tasks in the queue
        if (this.queue.length > 0) {
          this.processQueue();
        }
      }
    }

Emit events or call callbacks to notify of errors

Beyond logging errors, consider implementing a mechanism to notify other parts of your application about errors. This could involve emitting events or calling callbacks, allowing you to integrate error handling into your broader error reporting or monitoring system.

    // Modify the executeTask method to emit events or call callbacks on error
    executeTask(task) {
      this.concurrentTasks++;

      try {
        // Task execution logic goes here

        // Simulated error for demonstration purposes
        if (Math.random() < 0.3) {
          throw new Error('Simulated error during task execution');
        }

        // Successful execution
        console.log(`Task "${task}" completed`);
      } catch (error) {
        console.error(`Error executing task "${task}":`, error.message);

        // The task was already dequeued before it ran, so nothing is removed here

        // Emit events or call callbacks to notify of errors
        this.notifyErrorListeners(task, error);
      } finally {
        this.concurrentTasks--;

        // Check if there are more tasks in the queue
        if (this.queue.length > 0) {
          this.processQueue();
        }
      }
    }

    // New method to notify error listeners
    notifyErrorListeners(task, error) {
      // Implement your logic to emit events or call callbacks here
      // For example:
      // this.emit('taskError', { task, error });
    }
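One possible way to fill in notifyErrorListeners is a plain list of callbacks. The sketch below assumes a hypothetical onError registration method and a listener signature of (name, error); neither comes from a library, and an event emitter would work just as well.

```javascript
// Sketch: a queue that reports task failures to registered callbacks.
class NotifyingQueue {
  constructor(concurrencyLimit) {
    this.queue = [];
    this.concurrentTasks = 0;
    this.concurrencyLimit = concurrencyLimit;
    this.errorListeners = [];
  }

  // Register a callback that is invoked as listener(name, error).
  onError(listener) {
    this.errorListeners.push(listener);
  }

  enqueue(name, taskFn) {
    this.queue.push({ name, taskFn });
    this.processQueue();
  }

  processQueue() {
    while (this.concurrentTasks < this.concurrencyLimit && this.queue.length > 0) {
      this.executeTask(this.queue.shift());
    }
  }

  async executeTask({ name, taskFn }) {
    this.concurrentTasks++;
    try {
      await taskFn();
    } catch (error) {
      this.notifyErrorListeners(name, error);
    } finally {
      this.concurrentTasks--;
      this.processQueue();
    }
  }

  notifyErrorListeners(name, error) {
    for (const listener of this.errorListeners) {
      try {
        listener(name, error);
      } catch (listenerError) {
        // A faulty listener should not stall the queue.
        console.error('Error listener threw:', listenerError);
      }
    }
  }
}

// Usage: collect failures in an array that a monitoring layer could read.
const reportedErrors = [];
const notifyingQueue = new NotifyingQueue(2);
notifyingQueue.onError((name, error) => {
  reportedErrors.push(`${name}: ${error.message}`);
});
notifyingQueue.enqueue('save-profile', async () => 'saved');
notifyingQueue.enqueue('send-email', async () => {
  throw new Error('SMTP unavailable');
});
```

Wrapping each listener call in its own try-catch is a design choice: it keeps one misbehaving subscriber from hiding the error from the others.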

By incorporating these error-handling practices, your asynchronous queue becomes more resilient, providing mechanisms to gracefully handle errors, remove problematic tasks, and notify relevant parts of your application about encountered issues.

Advanced Async Queue Considerations

Cancellation Tokens

Cancellation tokens provide a mechanism to gracefully interrupt or cancel the execution of tasks within an asynchronous queue. This feature is particularly valuable in scenarios where tasks need to be aborted due to external conditions or changes in system requirements. By incorporating cancellation tokens, you enhance the flexibility and responsiveness of your async queue, allowing for more dynamic control over task execution.
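In JavaScript, the built-in AbortController and AbortSignal can play the role of a cancellation token. The following is a simplified sketch, assuming tasks are functions that accept a signal and using a sequential runner rather than the full queue class. The names cancellableDelay and runCancellable are hypothetical.

```javascript
// A timer-based stand-in for real work that honors an AbortSignal.
function cancellableDelay(ms, signal) {
  return new Promise((resolve, reject) => {
    if (signal.aborted) {
      reject(new Error('cancelled'));
      return;
    }
    const timer = setTimeout(() => {
      signal.removeEventListener('abort', onAbort);
      resolve('done');
    }, ms);
    function onAbort() {
      clearTimeout(timer);
      reject(new Error('cancelled'));
    }
    signal.addEventListener('abort', onAbort, { once: true });
  });
}

// Runs tasks one at a time; tasks that have not started yet are skipped after an abort.
async function runCancellable(taskFns, signal) {
  const outcomes = [];
  for (const taskFn of taskFns) {
    if (signal.aborted) {
      outcomes.push('skipped');
      continue;
    }
    try {
      outcomes.push(await taskFn(signal));
    } catch (error) {
      outcomes.push(error.message);
    }
  }
  return outcomes;
}

// Usage: abort while the second (slow) task is running.
const controller = new AbortController();
const cancellableRun = runCancellable(
  [
    (signal) => cancellableDelay(5, signal),
    (signal) => cancellableDelay(500, signal),
    (signal) => cancellableDelay(5, signal),
  ],
  controller.signal
);
setTimeout(() => controller.abort(), 50);
cancellableRun.then((outcomes) => console.log(outcomes));
```

The sketch distinguishes two cases: a task that is already running is interrupted through its signal, while a task still waiting is simply never started.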

Priority Levels

Introducing priority levels to your async queue enables the prioritization of certain tasks over others. This can be crucial in situations where different tasks have varying degrees of importance or urgency. By assigning priority levels to tasks, you can ensure that high-priority tasks are processed ahead of lower-priority ones, optimizing the overall efficiency and responsiveness of your system.
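A simple way to sketch priorities is to keep the pending array sorted as tasks are added. The example below assumes that a larger number means a more urgent task and that tasks of equal priority keep first-in, first-out order; PriorityAsyncQueue is a hypothetical name.

```javascript
// Sketch: pending tasks are kept sorted, highest priority first.
class PriorityAsyncQueue {
  constructor(concurrencyLimit) {
    this.queue = [];
    this.concurrentTasks = 0;
    this.concurrencyLimit = concurrencyLimit;
  }

  enqueue(taskFn, priority = 0) {
    const entry = { taskFn, priority };
    // Insert before the first entry with strictly lower priority,
    // so equal priorities keep their arrival order.
    const index = this.queue.findIndex((queued) => queued.priority < priority);
    if (index === -1) {
      this.queue.push(entry);
    } else {
      this.queue.splice(index, 0, entry);
    }
    this.processQueue();
  }

  processQueue() {
    while (this.concurrentTasks < this.concurrencyLimit && this.queue.length > 0) {
      this.executeTask(this.queue.shift().taskFn);
    }
  }

  async executeTask(taskFn) {
    this.concurrentTasks++;
    try {
      await taskFn();
    } catch (error) {
      console.error('Task failed:', error.message);
    } finally {
      this.concurrentTasks--;
      this.processQueue();
    }
  }
}

// Usage: the urgent task jumps ahead of the background task that is still waiting.
const startOrder = [];
const record = (label) => async () => {
  startOrder.push(label);
};

const priorityQueue = new PriorityAsyncQueue(1);
priorityQueue.enqueue(record('background'), 0); // starts immediately, the queue is empty
priorityQueue.enqueue(record('also background'), 0);
priorityQueue.enqueue(record('urgent'), 10);

setTimeout(() => console.log(startOrder), 20);
```

Priority only affects tasks that are still waiting. A task that has already started is not preempted, which is why the first background task still runs first here.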

Queue Pausing

Queue pausing functionality provides a means to temporarily halt the processing of tasks in the async queue. This feature is beneficial in scenarios where you need to freeze the execution of tasks for a specific duration, perhaps to perform maintenance or address unexpected issues. Pausing the queue allows you to control the flow of tasks without interrupting the overall functionality of the system.
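Pausing can be sketched with a single flag that the processing loop checks before starting new work. In this hypothetical PausableQueue, tasks that are already running are left to finish, and resume restarts processing.

```javascript
// Sketch: a paused flag stops new tasks from starting.
class PausableQueue {
  constructor(concurrencyLimit) {
    this.queue = [];
    this.concurrentTasks = 0;
    this.concurrencyLimit = concurrencyLimit;
    this.paused = false;
  }

  enqueue(taskFn) {
    this.queue.push(taskFn);
    this.processQueue();
  }

  // Running tasks continue; pending tasks wait until resume() is called.
  pause() {
    this.paused = true;
  }

  resume() {
    this.paused = false;
    this.processQueue();
  }

  processQueue() {
    while (
      !this.paused &&
      this.concurrentTasks < this.concurrencyLimit &&
      this.queue.length > 0
    ) {
      this.executeTask(this.queue.shift());
    }
  }

  async executeTask(taskFn) {
    this.concurrentTasks++;
    try {
      await taskFn();
    } catch (error) {
      console.error('Task failed:', error.message);
    } finally {
      this.concurrentTasks--;
      this.processQueue();
    }
  }
}

// Usage: tasks added while paused are held until resume().
const ran = [];
const pausableQueue = new PausableQueue(2);
pausableQueue.pause();
pausableQueue.enqueue(async () => { ran.push('a'); });
pausableQueue.enqueue(async () => { ran.push('b'); });
console.log('started while paused:', ran.length);
pausableQueue.resume();
console.log('started after resume:', ran.length);
```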

Exponential Backoff for Retries

Implementing exponential backoff for retries enhances the resilience of your async queue by introducing an intelligent delay mechanism. When a task encounters an error, rather than immediately retrying, exponential backoff involves progressively increasing the time between retries. This approach helps prevent overwhelming systems during transient failures and improves the chances of successful task execution upon subsequent attempts.
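As a minimal sketch of the idea, the helper below retries a promise-returning function and multiplies the wait by a constant factor after each failure. The name retryWithBackoff and its option names are assumptions for illustration; real systems often also add random jitter to the delay, which is left out here for clarity.

```javascript
// Hypothetical helper: retry a promise-returning function with exponential backoff.
async function retryWithBackoff(taskFn, { retries = 3, baseDelayMs = 100, factor = 2 } = {}) {
  let attempt = 0;
  for (;;) {
    try {
      return await taskFn(attempt);
    } catch (error) {
      if (attempt >= retries) {
        throw error; // out of retries: surface the last error
      }
      // The delay grows as baseDelayMs * factor^attempt (base, base*2, base*4, ...).
      const delayMs = baseDelayMs * factor ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delayMs));
      attempt++;
    }
  }
}

// Usage: a stand-in task that fails twice, then succeeds.
let calls = 0;
const flakyTask = async () => {
  calls++;
  if (calls < 3) {
    throw new Error(`transient failure ${calls}`);
  }
  return 'ok';
};

const retried = retryWithBackoff(flakyTask, { retries: 3, baseDelayMs: 5 });
retried.then((value) => console.log(value, 'after', calls, 'calls'));
```

A wrapped task like this can be handed to a queue as a single unit of work, so the retries of one task still count against the same concurrency slot.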

These advanced considerations contribute to a more sophisticated and adaptable asynchronous queue system, capable of handling a broader range of scenarios and aligning with specific requirements of complex applications. Depending on your use case, integrating these features can significantly enhance the robustness and efficiency of your async queue implementation.

Recap & Summary

All in all, we’ve explored the fundamental concepts of creating a basic asynchronous queue in JavaScript. Here’s a recap of the key elements covered:

- Async Queue Basics:

  - Initialized a queue array to store tasks.

  - Implemented an enqueue method to add tasks to the queue.

  - Created a dequeue method to retrieve the next task.

  - Maintained a concurrency limit variable to control how many tasks execute at once.

- Handling Errors:

  - Wrapped task execution in try-catch blocks.

  - Kept failed tasks out of the queue so they are not retried unintentionally.

  - Considered emitting events or calling callbacks to notify of errors.

- Advanced Async Queue Considerations:

  - Cancellation Tokens: a mechanism to gracefully interrupt or cancel task execution.

  - Priority Levels: a way to prioritize tasks based on importance or urgency.

  - Queue Pausing: the ability to pause the queue temporarily for maintenance or issue resolution.

  - Exponential Backoff for Retries: progressively longer delays between retry attempts for better resilience.


These advanced considerations elevate the async queue to a more sophisticated level, addressing scenarios such as dynamic task cancellation, prioritization, controlled pausing, and resilient retry strategies.

By combining these principles, you can build a versatile and robust async queue tailored to the specific requirements of your application. Whether you’re optimizing resource management, handling errors gracefully, or introducing advanced features, a well-designed async queue is a powerful tool for managing asynchronous tasks efficiently.